Ivan Stepanov and Artem Voronov. One-Point Gradient-Free Methods for Composite Optimization with Applications to Distributed Optimization

This work is devoted to solving the composite optimization problem with a mixed oracle: for the smooth part of the problem, we have access to the gradient, and for the non-smooth part, only to a one-point zero-order oracle. For this setup, we present a new method based on the sliding algorithm. Our method allows us to separate the oracle complexities and to compute the gradient of one of the functions as rarely as possible. The paper also shows how the new method applies to problems of distributed optimization and federated learning. Experimental results confirm the theory.
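A one-point zero-order oracle returns a single function value per query, so a gradient surrogate has to be built from those values. A common way to do this is the randomized-smoothing estimator (d/τ)·f(x + τe)·e, with e drawn uniformly from the unit sphere; in expectation it equals the gradient of the smoothed function f_τ(x) = E_u[f(x + τu)], u uniform in the unit ball. The sketch below illustrates that generic estimator only. It is an assumption that it resembles the estimator used in the paper, the sliding scheme itself is not shown, and the names `one_point_grad`, `tau`, and `n_samples` are hypothetical.

```python
import numpy as np


def sample_unit_sphere(d, n, rng):
    """Draw n directions uniformly from the unit sphere in R^d."""
    e = rng.standard_normal((n, d))
    return e / np.linalg.norm(e, axis=1, keepdims=True)


def one_point_grad(f, x, tau, rng, n_samples=1):
    """Hypothetical one-point zeroth-order gradient estimate of f at x.

    Each sample queries f exactly once, at x + tau*e, and contributes
    (d / tau) * f(x + tau*e) * e. Averaging n_samples of them reduces
    variance; the mean estimates the gradient of the smoothed f_tau.
    """
    d = x.shape[0]
    e = sample_unit_sphere(d, n_samples, rng)
    values = np.array([f(x + tau * ei) for ei in e])  # one oracle call per direction
    return (d / tau) * np.mean(values[:, None] * e, axis=0)


# Demo: for a linear f the smoothed gradient equals the true gradient a,
# so the averaged estimate should land close to a.
rng = np.random.default_rng(0)
a = np.array([1.0, 2.0, 3.0])
f = lambda x: a @ x
g = one_point_grad(f, np.zeros(3), tau=0.1, rng=rng, n_samples=200_000)
```

A single sample is unbiased for ∇f_τ but very noisy (its variance grows with d and with |f|/τ), which is why zero-order methods typically average, batch, or, as the abstract describes, arrange for the expensive oracle to be called as rarely as possible.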

arxiv

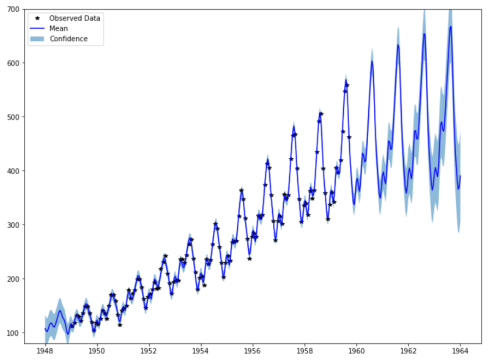

Sergey Skorik. Gaussian regression

Sergey investigated Gaussian regression from a theoretical point of view and also built a model for predicting the number of flight passengers.

GitHub